I started this project toward the end of Tihar, 2081. I wanted to learn a JavaScript framework - they're all the hype, always. I also really enjoy playing the popular card game called 'CallBreak' (what it's called here, anyway). So, the stage was set for me to make an online version of the game.

I have not had much interest in tackling the 'normal' problems that are more common in mainstream software (a term whose popular definition/perception I think has changed dramatically over the years) - problems in the realm of databases and multiple concurrent users, realtime interactions, etc. Those challenges have historically sounded pretty foreign and (thus?) bland to me. As a result, I've not worked much in the web world apart from a few occult projects that by their very nature had to have some aspect of the web (for example, PhoneMonitor). I have written the API backend for an as yet to-be-announced app called EzRestro, but not the complete front-end. So, this would be the first full web app from me.

I naturally started from the backend because I feel like it's mostly just straight-up coding the functionality you anticipate you'll need in your app, with a bit of DB design. For a startup project, the DB design decisions are not that crucial as long as they're reasonable. Having used ASP.NET Core WebApi with npgsql-backed EFCore as the ORM (code-first) for EzRestro's backend, it was the most natural choice for me to get going quickly. This time, I wasn't trying to learn .net core webapi, so I was only concerned with the front-end. As long as the backend served its purpose, I would be okay. Going back to the decision-making process before implementing the backend for EzRestro in .net, I had naturally wanted to opt for PHP owing to its wide availability in all shared hosting providers' machines and my past acquaintance with it; but following persuasion from a friend from work, a healthy dose of underestimation of PHP's realtime capabilities, coupled with the luxury of having a home server with Proxmox and familiarity with container technologies (Docker and LXCs), I had finally agreed upon .net. This time as well, I knew I could depend on the now familiar ASP.NET Core.

Most of the backend - API and the DB design that I took hand-in-hand was wrapped up fairly quickly in a matter of a few days to maybe a week. The backend did see a few changes as I came to flesh out the front-end, but the bulk of it was over way before I had even started work on the front-end, which I knew it would be a great chance to learn a new technology in web dev. I initially tried to buck that though by trying to do it all in plain old JavaScript; but I quickly realized that it would be too painful and messy, especially for someone who has not done much JavaScript. It's easy to get going but it is way too easy to create spaghetti code. So, I went JS-framework shopping, savoring every bit of it - for days. I liked the idea of Svelte. It was relatively new, but it worked by compiling to JavaScript, rather than gluing its engine to the product. It looked a lot cleaner and more intuitive (on the surface, at least) than other frameworks like Vue (some experience while writing a hybrid app for EzRestro using Ionic/Capacitor) and mostly React. The built-in, intuitive router in SvelteKit compared to Vue and React was a huge deal as well. Listened to Rich Harris, the guy who started it all, liked his overall take on reactivity and enjoyed hearing about his journey from being a graphics designer to a developer. Using third party libraries also seemed less painful than in, say, React. The official Svelte 5 tutorial was very bite-sized and digestible as well. I later realized that it hadn't taught me enough, but I guess that's what the docs are for; but it was great to get started and build something quickly. Talking about the docs, I found that to be a pain-point beside the confusion created by the fairly recent Svelte 5 release - too much old code lying around on the web. Later, when I was knee deep in Svelte, I observed that no query I typed into Google would lead me to the relevant documentation page. More generally, the community around Svelte - especially Svelte 5 - is noticeably smaller than other frameworks.

Anyway, I got to learn a lot about the travails of front-end development. The ups and downs of learning a new technology aside, the design aspect of it was what was so confusing to me. Where should I put this component? What should the overall layout of the webapp look like? How do I center this element here? Why is it not working? How is this so hard? That's the design of the visual aspect, the UI. Being new to a JS framework had its own share of problems to bestow on me. The details of how the data should flow between pages and components, how to best manage state between routes, how best to organize related functionalities, all these and more were definite hurdles I had to grapple with. The sheer lack of enforcement of strong opinions regarding how things should be done in client-facing web development, at least from what I perceived, was debilitating. This was unlike anything I'd come across during traditional Win32 WinForms/WPF or service development. Here, the UI or visual component I was designing was inextricably tied with the logic backing it up, and there was no generally widely agreed upon convention to guide me along. I'm not sure if that made much sense to the reader but I felt a definite distinction between the software development I had done before, and this time. I could almost feel it strangling the joy away from the experience of programming. I'm sure people proficient in front-end development will disagree with my assessment, but it is what it is. To make a good looking, consistent UI isn't hard in, say, native windows application development. I have a general sense of all the UI elements that will be needed, where they need to go, etc. Doing that for the web? You get a blank canvas. Define your own UI - whatever you want, however you want, with CSS and HTML. For someone not well versed in basic UI patterns for the web, it gets overwhelming. I'm not sure how I would manage if not for shadcn-svelte components and blocks.

All that notwithstanding, I was finally able to hammer out a half decent web app powered by Svelte 5 and .net 8.0 in roughly a month.

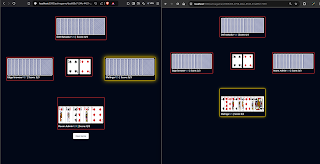

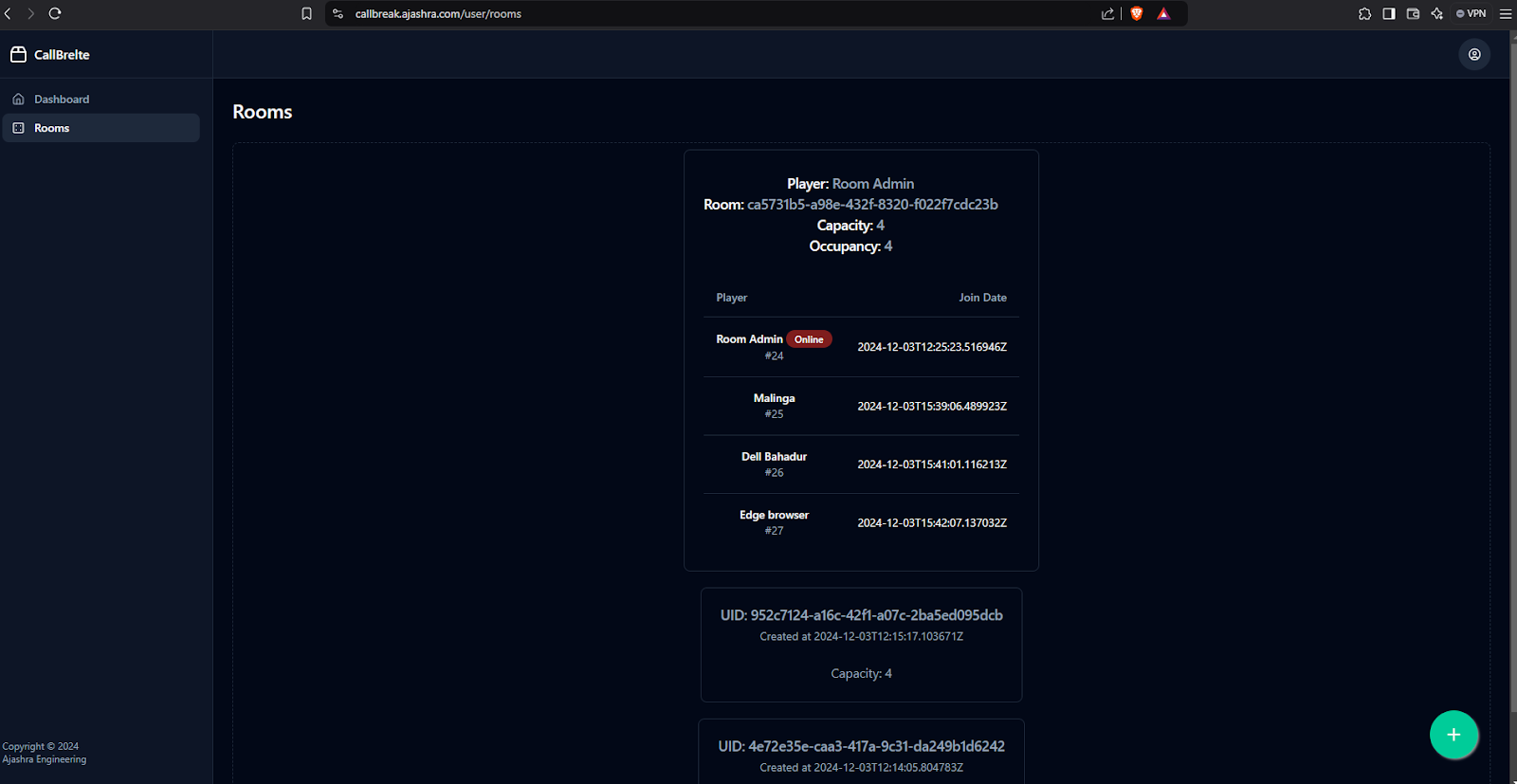

The deployment part is interesting in itself, and equally enlightening to me, but about that in the MDD file towards the bottom of this article. First, the screenshots:

That's all for the pictures. The application is deployed and is available for anyone to try at

The code, as of now, is hosted as two repositories - one for the Svelte app, another for the API - on GitHub, but is private. I may make it public later.

Brought in from old README.md:

-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

Specifications:

1. User:

- Ability to create a new room

- If a room is already active, enter it when the user logs in to /user

- Ability to start and end games (only one active game at a time)

2. Player:

- Ability to enter a room and join a game

- Ability to make moves in the active game of the room

3. Room:

- Only one active game at a time

- Home of players

- Created by a user, who becomes the room admin

4. Game:

- Only one active at a time in a room

- Created by room admin

- Collection of players

======

Flow:

======

1. A user creates a room, notes down its UID. The user is the room admin

2. A stranger enters a room with its UID, becomes a player with a UID

3. The admin creates a new game in the room with selected players. A new entry added to Games table

4. The admin starts the first round of the game. A new entry added to Rounds table

5. A random player of the game gets the first turn

6. Cards dealt to all players of the game, starting from the first-turn player. New entries to Turns table for each player, with Type=0 (0=deal, 1=play), with Hand={dealt cards}=as a rule, cards at hand at the end of the turn

7. For the active game's active round, the player after the last entry to the Turns table gets a notification to play a card

8. The player selects a card and POSTs to /api/game/playcard with his player UID. The endpoint must guard against out-of-turn requests and out-of-hand card plays

9. A new entry is added to Turns table for the player with his Hand reduced by the played card, and Play containing his played card. Determine the RoundId for the entry before adding

10. Send a notification to all players of the game advertising the played card

11. Check if the round is over. If not, goto #7

12. The round is over; determine the winner for the round; update the current Round entry in the Rounds table with the winner PlayerId

13. Send a notification to all players of the winner of the round

14. Check if the last turn for the game had an entry with Hand==Play

15. If no, goto #4

16. Tally final scores for the game using the Rounds table

A table that holds definite states of a Game could be beneficial, though not completely necessary. This is primarily to deny out-of-turn and out-of-order requests, such as playing a card before declaring one's anticipated score.

But this kind of validation can be done based on other information from various tables.

Regardless, a table - say, Scores - to record players' declarations for anticipated scores, as well as actual final scores when the game is over, is needed. It should have columns for the GameId, PlayerId and AnticipatedScore.

After cards have been dealt in the first round of a game, a notification should be sent to each player, in order, asking for their declaration.

Each player hits an endpoint to do this when it's their turn to do the declaring. The endpoint should create a new entry in this new table for each player.

Nov 18, 2024 | 7.37 PM

-----------------------

When reading the API response given by .net backend as a JSON, make sure to use lowercase for the names of the properties in Java/TypeScript regardless of the case in .net side code.

-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

Friday, November 8, 2024 3:37:57 PM

==========================

Don't mark your load() function (in +page.ts or +layout.ts) as async. Don't 'await' inside it. Doing {#await ...} on the returned value of the load() function in such case will cause the page to not render until the load() function is done. Just return the unresolved Promise, either directly or as a nested property, but NEVER 'await' inside load()

Friday, November 15, 2024 8:55:54 PM

==========================

I lost a 6 hours (based on my Brave browser history to troubleshoot the issue, from 3 PM to now, almost 9 PM) to this issue in .NET 8.0. The issue is as follows:

Doing a POST request to the following action method via JavaScript fetch abruptly crashed the application:

************

[HttpPost("googlesignon")]

public async Task<IActionResult> GoogleSignOn([FromBody] string googleJwt)

{

// doesn't matter whatever's in here..

}

************

The problem occurred only when the POST body was not well formed enough for the runtime to assign to the parameter "googleJwt". So, a standard validation error response inevitably followed. That much would be understandable, and expected actually, but the problem was that the app would just exit - sometimes with an exit code of 0 and sometimes with 0xffffffff - no exception, no nothing. I scoured the internet for everything around this topic, ChatGPT'd it, but there's absolutely nothing on this. Tried selecting different breakpoint options for different types of exceptions in Visual Studio. Created another controller with the same kind of action method to test it out - the same result. Tried changing HTTP/HTTPS, nothing. Tried whatever suggestions ChatGPT gave me, nothing worked. Changed the web browser, nothing. Removed the injected dependency interfaces, nothing.

Peculiarly, this didn't happen with requests from Postman - the validation errors 400 and 415 did occur, depending on what payload I sent with the POST request to the endpoint, but it didn't crash the server application. My front-end JS code though, did, and without fail. Any kind of validation error for the googleJwt variable would inevitably crash the process no questions asked. Obviously this is an unacceptable behavior from a server application. So, I thought maybe .NET 8.0 was to blame, so I even scoured the internet and the dotnet repo on GitHub for related bugs, but nothing. I hadn't noticed this behavior before though, so I thought maybe the runtime was corrupted or something. So, I opened up the Visual Studio Installer, and updated all, not really sure if that would also repair the installed runtime. It then asked me to restart my computer. I did. Apparently, it had installed .NET 9.0. I opened up the same solution again (didn't change the target framework, still 8.0) and tried it again, and lo and behold - the weirdness had stopped.

I used to think that the .NET runtime was an epitome of robustness and consistency, especially in a server-side setting with high reliability requirements; but this incident has made me realize that not even a giant such as .NET always works as expected, for whatever reason.

I guess sometimes a restart is all it takes. ¯\_(ツ)_/¯

Wednesday, November 19, 2024 12.19 PM

========================================

It happened again yesterday at around 11 PM. It was late, so I just let it be and shut down the computer. Not sure what's causing it.

Anyway, I'm in a bit of a pickle. In my /player route, I have a +page.ts load() function that connects to SignalR hub with server and registers client-side callbacks and all that

stuff. It also loads the players in the room as a data prop back to +page.svelte as a promise so that the UI can display a loading text until the promise is resolved using the standard

{#await} construct. The problem is, I would like to update the UI with any extra players that join the room in realtime using the registered signalR callbacks.

The easiest way in theory is to reload the page so that the same load() function runs again and returns an updated list of players. To do that, if I include the invalidateAll() method call

inside my PlayerCameOnline() handler, every time this callback is called by a new player coming online, it apparently triggers re-registering (aka invocation of EnablePushNotifications(playerUid) method on the server) with the server's signalR hub

(after a connection is established with the server, a server-side method EnablePushNotifications(playerUid) is invoked by the client, which checks if the presented playerUid

is already in the online players list, and if so, the connection gets booted. this mechanism is intended to prevent multiple connections for the same playerUid.)

This leads to the first player being kicked i.e. their connection is closed because apparently, the invalidateAll() call doesn't entail first disconnecting from the hub, but

instead leads to another connection being created, re-registration being triggered and ultimately being disconnected despite having a perfectly good connection.

This leads me to believe that either a) I need to first disconnect before calling invalidateAll() b) avoid calling invalidateAll() completely, and find out a way to make

it work with some combination of a custom store and rendering data from it in the UI of +page.svelte instead of the data returned by the +page.ts file's load() function.

The thing is, the data retrieval approach using the load() function allows {#await} to gracefully display a "Loading..." text until all of it is available to show on the UI.

Displaying data from the store and updating the store inside the registered callbacks doesn't mix well with the UI setup to display data from the load() function.

The internet mostly agrees that invalidateAll() is not the way, especially as I've found out when signalR connection is a big factor to consider.

3.35 PM

=========

I've observed that it's better to avoid passing data props from +page.ts or +layout.ts files' load() functions to +page.svelte file unless absolutely necessary.

Just display a "loading..." placeholder and load your data (by calling api's) asynchronously inside your +page.svelte, saves headaches.

4.18 PM

=========

The concept of a player being "Online" isn't present at the DB level. It's purely a SignalR thing.

9.10 PM

=======

shadcn-svelte is a mess. when it works, it works and looks great. but mostly, it's more like a hobby project that's sure to get on your

nerves more than anything. things are constantly changing and breaking. for a lot of components and "blocks" that they provide the code for, you

have to troubleshoot the code yourself - so many of them don't work out of the box. it's sure to leave you pulling your hair out.

for now, I'd advise any newcomer to Svelte looking for an easy to use component library to avoid shadcn-svelte till it matures or dies(would be a shame)

there's many other seemingly more mature libraries though arguably not as sleek and polished as shadcn.

beercss surprised me. yesvelte looks alright and it's at least complete. skeletonUI looks like a toy.

flowbite looks alright as well, but they don't give blocks for free. daisyUI is fine as well.

November 20, 2024 | 3.17 PM | Wednesday

========================================

The code for the "blocks" shown in shadcn-svelte didn't work because I had overlooked the following line in svelte.config.js:

alias: {

'@/*': './path/to/lib/*'

}

And I hadn't modified it correctly, apparently.. SMH.

I had scoured svelteUI and flowbite and considered tailwindUI's free framework-independent(read: html with tailwind classes) components (specifically, stacked application shell layout) instead of shadcn. I also managed to find out during this whole-day search operation about bits-ui and how it looks so similar to shadcn-svelte. All this time, the weird errors I was getting were down to the path to the /lib folder not being correctly put into the svelte.config.js file.

November 21, 2024 | 4.20 PM

=============================

Setting a value to a Svelte store that's got a subscriber (such as a store that keeps in sync with localStorage) will have a delay between setting the store value and the change reflecting in whatever the store is syncing with (if it's syncing with an external storage that is, which is localStorage most of the time). If the store is syncing with localStorage, which could be a common practice, be careful that $someStore='some value'; will not immediately reflect on the localStorage - it's an asynchronous process. One SO post on the topic: https://stackoverflow.com/questions/73081344/svelte-store-not-updating-subscribed-value-synchronously

5.26 PM

========

I think I'm wrong in saying that the syncing processing with localStorage is asynchronous. I stepped through my code and see that assigning a value to my store variable does in fact iterate through all the subscribed callbacks, only then moving to the next statement. My problem was that I was manually putting in values inside my localStorage as a shortcut, so it was creating inconsistencies in my web app, specifically, it was looping non-stop between the login screen and the logged-in screen because the appropriate state variable wasn't actually getting updated because I was changing it externally. In a real world scenario, that wouldn't happen.

Oops! That's not entirely correct either. Even when I externally modify the localStorage key's value for the store, my button press (to leave the current room i.e. to delete the playerUid information from store hence localStorage) correctly triggers the update in the store and thus the localStorage key's value. But after that is the code to goto('/') i.e. to go back to the root path of the url. There, the page is supposed to pull in the freshly emptied playerUid from store hence localStorage, but apparently, the root page's +layout.js file's load() function - which returns the value of the store hence localStorage, that +page.svelte file depends on - isn't being called. As you can imagine, the +page.svelte is gonna be assuming the same old value for the playerUid, which is not gonna be empty, and hence it's gonna goto('/player') that at this point doesn't have a corresponding sibling +page.js or +layout.js or similar files with exported load() functions, only onMount(), which correclty reads the emptied store value for playerUid and redirects back to '/', causing a loop.

Here's the question posed to chatGPT, since I struggled to find answers related to the mechanics of the load() function - Svelte being Svelte (small community, rapidly changing):

Q: when i'm using goto('/') to programmatically navigate to the root of my web app, its +layout.js's load() function isn't being called. why?

A: In SvelteKit, the load() function in +layout.js (or +layout.ts) is not automatically called when navigating programmatically using goto() if the framework determines that the data or state hasn’t changed. This behavior is due to client-side routing optimizations in SvelteKit, which avoid reloading layouts or pages unnecessarily.

Here's why it might happen:

Cached State: If the current data in the load() function's context is still valid (e.g., based on unchanged parameters, session, or the request), SvelteKit might skip re-executing the load() function.

Already on the Page: If the application is already at / and you call goto('/'), SvelteKit recognizes no actual route change and doesn’t re-trigger the layout or page load functions.

How to Ensure load() Is Called

Force Invalidating the Load Function: Use the invalidateAll() method to revalidate all load() functions in the hierarchy:

javascript

Copy code

import { invalidateAll } from '$app/navigation';

await invalidateAll();

await goto('/');

Invalidate Specific Data: If your load() function depends on specific data (like session state or query parameters), use the invalidate() method to force SvelteKit to refetch data related to that specific dependency:

javascript

Copy code

import { invalidate } from '$app/navigation';

await invalidate('some-key-or-path'); // Replace with your specific dependency

await goto('/');

Ensure State Dependencies Are Dynamic: If your +layout.js's load() function depends on state, make sure that any changes to that state trigger revalidation. For example, check if your load() relies on query parameters or the session, and ensure those change to trigger the reload.

Alternative: Full Reload: In extreme cases where the above doesn’t work or isn’t desirable, you can force a hard page reload to reinitialize the entire app:

javascript

Copy code

window.location.href = '/';

Debugging Tip

Use console.log inside your +layout.js's load() function to verify whether it’s being called. If it isn’t, investigate the inputs (like session, parameters, etc.) to identify why SvelteKit considers them unchanged.

Let me know if you’d like a more specific example tailored to your setup!

6.08 PM

=========

Looks like the SvelteKit tutorial does address this in Advanced Loading/Invalidation and Custom Dependencies. The idea behind custom dependencies is unbelievable, and I don't mean that in a good way.

Excerpt:

**********

When the user navigates from one page to another, SvelteKit calls your load functions, but only if it thinks something has changed.

In this example, navigating between timezones causes the load function in src/routes/[...timezone]/+page.js to re-run because params.timezone is invalid. But the load function in src/routes/+layout.js does not re-run, because as far as SvelteKit is concerned it wasn’t invalidated by the navigation.

We can fix that by manually invalidating it using the invalidate(...) function, which takes a URL and re-runs any load functions that depend on it. Because the load function in src/routes/+layout.js calls fetch('/api/now'), it depends on /api/now.

**********

Another one:

*********

Calling fetch(url) inside a load function registers url as a dependency. Sometimes it’s not appropriate to use fetch, in which case you can specify a dependency manually with the depends(url) function.

Since any string that begins with an [a-z]+: pattern is a valid URL, we can create custom invalidation keys like data:now.

Update src/routes/+layout.js to return a value directly rather than making a fetch call, and add the depends:

*********

From the docs:

************

You can also rerun load functions that apply to the current page using invalidate(url), which reruns all load functions that depend on url, and invalidateAll(), which reruns every load function. Server load functions will never automatically depend on a fetched url to avoid leaking secrets to the client.

A load function depends on url if it calls fetch(url) or depends(url). Note that url can be a custom identifier that starts with [a-z]::

import type { PageLoad } from './$types';

export const load: PageLoad = async ({ fetch, depends }) => {

// load reruns when `invalidate('https://api.example.com/random-number')` is called...

const response = await fetch('https://api.example.com/random-number');

// ...or when `invalidate('app:random')` is called

depends('app:random');

return {

number: await response.json()

};

************

I've found out that adding the depends() with a custom string that looks like a url does cause the load() function in my +layout.js file to re-run when I invalidate() the same string from my '/player' route's +page.svelte, which reads the updated value of the playerUid store, but weirdly, the actual +page.svelte for my '/' route itself doesn't run anymore now. I don't expect to find an answer to why anymore. I so wish I had used another, more mainstream framework for this app. For all its beauty, there's a lot of ugliness - both visible/documented and invisible/unexplored hiding behind it.

6.41 PM

========

I tried another thing - since invalidate() apparently returns a Promise, I thought maybe I ought to wait for it to complete before navigating to '/'. So instead of:

function OnLeaveRoom() {

$playerDetails = ''; // clear the player's uid from store

invalidate('some:playeruidstorevalue'); // force the load() function for the root page to re-execute. SMH...

goto('/');

}

I did:

async function OnLeaveRoom() {

$playerDetails = ''; // clear the player's uid from store

await invalidate('some:playeruidstorevalue'); // force the load() function for the root page to re-execute. SMH... EDIT: the 'await' is critical here for some reason..

goto('/');

}

....aaaaand VOILA! It no longer skipped rendering the actual root +page.svelte. It ran the load() in the root +layout.js because of the depends('some:playeruidstorevalue'); line in there (which can be any url-like string according to the docs), and then ran the +page.svelte page's code as well, which was exactly what I wanted!

Alright, that's enough learning and troubleshooting for today. Didn't get to actually work on my UI. Will continue tomorrow.

Nov 22, 2024 | 12.13 PM

========================

I feel like I've overused the +layout.js files' load() functions. Source of so many headaches and hidden behaviors.

5.42 PM

=======

Passing data from child pages to the parent +layout.svelte (for example to change the title of the page in the parent +layout.svelte as the user navigates to different tabs)

is not even addressed anywhere in the Svelte tutorial or the Docs. I found an SO post from 2021: https://stackoverflow.com/questions/68464777/in-sveltekit-how-to-pass-props-in-slot-using-layout-svelte-file and it recommends using the set/getContext functions. Passing data from slots to containers is discussed in the docs/tutorial but not for +layout.svelte

Oh, found another one from around the same time, telling to use the store: https://stackoverflow.com/questions/70927735/pass-variable-up-from-page-to-svelte-layout-via-slot

There's another one that makes more sense and seems more "proper": https://stackoverflow.com/questions/76982787/provide-data-to-main-layout-from-any-route-in-sveltekit

Apparently, just return the required data from the child +page.ts file's load() function and use the special $page store to get its 'data' property that apparently holds all the properties returned from all the child load() functions. Who knew.. Maybe the docs should have been more explicit about this use case?:

[From Svelte 5 docs: https://svelte.dev/docs/kit/load#$page.data]

*****************

$page.data

The +page.svelte component, and each +layout.svelte component above it, has access to its own data plus all the data from its parents.

In some cases, we might need the opposite — a parent layout might need to access page data or data from a child layout. For example, the root layout might want to access a title property returned from a load function in +page.js or +page.server.js. This can be done with $page.data

****************

Nov 25, 2024 | 10.43 AM | Monday

======================-=-========

I have created a separate component to display all the players in a room where a given playerUid belongs. The component handles the process of creating a signalR connection when mounted and closing it when destroyed, handles real-time updates of showing which players are online and offline. I placed it directly inside the +page.svelte of the /player route, and it works as expected. When another player of the room comes online, it shows an "Online" badge next to their name, and when they go offline, the badge is removed. But when trying to place the same component inside a shadcn-svelte component called "Collapsible", inside the Collapsible.Content tag, it appears that the reactivity system is suspended. No realtime updates occur when inside the tag. I moved it outside Collapsible.Content, and suddenly it's back to working like normal again.

1.13 PM

=======

The files inside /static folder created by SvelteKit is automatically available to all .svelte pages. I can for example load an image from /static/cards/KS.svg as simply as <img src="cards/KS.svg"> [ref: https://stackoverflow.com/questions/74220753/svelte-path-to-a-static-folder]

3.15 PM

=======

I tried using cardJS from https://richardschneider.github.io/cardsJS/hand-layouts.html but none of the hand layouts work inside this project's .svelte pages. I created another .html file (with a 'cards' folder containing all the .svg card files) with the same code, and it works there:

---------------

<link rel="stylesheet" type="text/css" href="https://unpkg.com/cardsJS/dist/cards.min.css" />

<script src="https://ajax.googleapis.com/ajax/libs/jquery/1.11.2/jquery.min.js"></script>

<script src="https://unpkg.com/cardsJS/dist/cards.min.js" type="text/javascript"></script>

<div style="flex">

<div class="hand hhand-compact active-hand">

<img class='card' src='cards/AS.svg'>

<img class='card' src='cards/KS.svg'>

<img class='card' src='cards/QS.svg'>

<img class='card' src='cards/JS.svg'>

<img class='card' src='cards/10S.svg'>

<img class='card' src='cards/9H.svg'>

<img class='card' src='cards/3H.svg'>

<img class='card' src='cards/10D.svg'>

<img class='card' src='cards/4D.svg'>

<img class='card' src='cards/2D.svg'>

<img class='card' src='cards/8C.svg'>

<img class='card' src='cards/7C.svg'>

<img class='card' src='cards/6C.svg'>

</div>

</div>

-------------------

The images themselves load just fine, but the hand layout doesn't work. The cards display vertically in a line, no matter what I do. So, I'm gonna do it myself using flexbox and the library's CSS: https://unpkg.com/cardsJS@1.1.1/dist/cards.css

Useful CSS thing: https://www.w3schools.com/cssref/sel_nth-child.php

Nov 26, 2024 | 3.18 PM | Tuesday

================================

When a User (anyone with an account) creates a room, a Player is automatically created for them. This Player's UID is the room admin's UID.

Nov 27, 2024 | 1.33 PM | Wednesday

===================================

About specifying the location to static assets.. If you're using an image say 4D.svg that's inside the /static/cards folder, you can do 'cards/4D.svg' IF you are inside a .svelte file @ /routes/your_route_here/+page.svelte. If you create another folder inside your first route, say /routes/your_route_here/[slug]/+page.svelte, you're gonna have to go one level up to reference your asset: '../cards/4D.svg'. I experienced this quirk when adding a slug [playeruid] inside the /activegame route.

3.07 PM

=======

Getting your head around the reactivity system in Svelte 5 is not easy. I was trying to update the cards rendered by my DiscardPileOfCards component. The following is the only way I finally came to:

***********

<script lang="ts">

let { cardsStr }: { cardsStr: string } = $props();

let cards: string[] = $derived(cardStrToArray(cardsStr));

function cardStrToArray(cardsStr: string): string[] {

let retval: string[] = [];

let tokens = cardsStr.split(',');

tokens.forEach((token) => {

let trimmedToken = token.trim();

if (trimmedToken != '') retval.push(trimmedToken.toUpperCase());

});

return retval;

}

</script>

<div class="rounded-sm border-2 border-red-500 p-2">

{#each cards as card}

<img class="card tight" src={`../cards/${card}.svg`} />

{/each}

</div>

************

Initially, I had tried to do it without a separate function to convert the card string cardStr to array, and I failed miserably, repeatedly. Also, try to change the $derived() to $state() and it won't react when the prop - cardsStr - changes. The docs really don't give it away, they don't appear when searching on google either.

November 28, 2024 | 4.23 PM | Thursday

=======================================

Ordering the players and their hands around according to their playerId did require some thought. It involved constructing an array of opponents' hands sorted by the playerId.

November 29, 2024 | 12.53 PM | Friday

=======================================

I was returning a Dictionary<integer,integer> from api/game/getplayershandcounts which is playerId:noOfCardsInPlayersHand. I was assuming that after fetching from the client side (in TypeScript) and doing a .json() on the response object, if I assigned it to a Map<number,number>, it would automatically work through maybe a type coercion of some kind, but I was wrong. When the code: for (const [playerId, cardName] of playedCardsDict) {...} tried to run, the browser threw an error saying the object playedCardsDict (which I typed as Map<number,number> but assigned it the result of response.json()) was not iterable. I only later realized that the object was still a plain old javascript object - confirmed via typeof(playedCardsDict) in devtools. I have to manually convert the object to the intended Map<number,number> type before passing around the result of the API request. Ref: https://stackoverflow.com/questions/52607792/typescript-can-not-read-a-mapstring-string-from-json-data <-- this was not easy to find

3.23 PM

=======

Designing the API/backend for a card game without first going through the thought process of making the actual front-end was harder than I imagined, way more than designing the backend for EzRestro for sure. Case in point - the GetLatestPlayInfo() endpoint. Up to now, I haven't made use of it from my front-end. I had anticipated that I would need an endpoint to retrieve the latest state of the active game, hence the endpoint. However, as I fleshed out the interface of the actual game, I felt the need to create separate endpoints for retrieving the number of cards in the players' hands, the actual cards in the current player's hand and the cards in the discard pile, so I ended up actually writing them up in the backend program. Then came the need to know which player was up next - whose turn it is. I reached to modify the GetMyHandAndTurnInfo() endpoint to not just return the isItMyTurn property, but instead a "nextTurnPlayerId". But then I realized that I had the aforementioned endpoint just lying around - GetLatestPlayInfo() - that I had made no use of, and it returned, among other things, whose turn it is next:

public struct TurnInfoShort

{

public int PlayerId { get; set; }

public string PlayerName { get; set; }

public string? PlayedCard { get; set; }

public bool RoundIsOver { get; set; } // clients should request to get the latest score /getscore if this is true

public bool GameIsOver { get; set; } // same here

public int NextTurnPlayerId { get; set; } // needless to say this is moot if game is over

}

I guess originally, I had thought that the state of a game should be maintained on the client-side as far as things like the current discard pile and players' hands are concerned. Despite the fact that each step of the game being recorded in the DB, no endpoint had been initially provided, delegating that responsibility entirely to the front-end. But when I started designing the visual elements of the game, and the logic that goes with it, I naturally and without second thought found myself writing methods for endpoints to retrieve these elements of the game state. That's surprising to me. It's like trying to bring together in one single line, tunnels bored from two opposite sides of a hill. It's not until you meet in the middle that you realize that they don't meet straight. I am guessing it was my failure to anticipate fully what would be required from the front-end to render the game, and all of the elements of its state that led to this mismatch. The mechanics here is more involved than that in the EzRestro app I guess. Not necessarily more involved even, perhaps too many ways to solve the same problem. The current way I'm doing it, I'm retrieving the elements of the game state piecemeal, which requires objectively greater number of API hits and possibly more DB queries. Combining all the state element queries to a single endpoint, which could be my way of thinking when I first coded up the GetLatestPlayInfo() endpoint, would save those extra backend calls. A state update would be required on the front-end when either one of the following happens: a new game is started leading to a CardsHaveBeenDealt() callback from server, a CardHasBeenPlayed() callback from server, loading the active game page. The essential elements of the game state are: which playerId's turn it is, current discard pile cards, current hand count of other players, the player's cards in hand. Besides these, the total number of players in the active game along with their playerId and name, the current game's isActive status, and the current player's details such as the playerId, name and playerUid are the metastate elements of a game. I'm sure a lot of these could be aggregated together in the interest of minimizing backend and DB hits, but it is what it is right now. Perhaps in the future, when the game is functional, I can move on to the optimization and refactoring phase. 4.01 PM

Nov 30, 2024 | 3.42 PM | Saturday

=================================

After PlayCard() is called, it returns the wrong info (stale info). GetPlayedCards() is returning the right info. GetMyHandAndTurn() is returning the right (remaining) hand cards, but nextTurnMine property is wrong(old). GetPlayersHandCounts() is returning the right info. The argument sent via the callback CardHasBeenPlayed(playedCardInfo) is ALSO stale!

I just found out that the assumption I made in writing the following piece of code:

**********

var turnsOfThisGame = await _dbContext.Turns.Where(turn => playerIds.Contains(turn.PlayerId)).Include(turn => turn.Player).ToListAsync();

Turn lastTurn = turnsOfThisGame.Last();

int latestRoundId = lastTurn.RoundId;

**********

is wrong. EF Core doesn't give you entries from the DB in any specific order. I had read about it a few days ago, and explicitly ordered the results of the queries that I wrote then for the final few endpoint actions methods using .OrderBy() and .OrderByDescending(). However, the above code was written maybe a week or so ago, and it wasn't tested either (coz I just finished the front-end yesterday, only after which I started testing all the endpoints in order), hence the wrong result. To obtain the last turn, I would explicitly need to order the result of the DB query by turnId.

After card has been played, the players need to update the discard pile, self hand cards, and (potentially, if the round is over, the scores of all the players)

10.35 PM

========

Display scores (actual/declared) for all players. Only allow to click on a card (including hover effect) if it's our turn, and if all players have already declared scores.

Also, if the current player hasn't declared their score, open a non-intrusive input/modal/dialog asking for a guess.

Dec 1, 2024 | 11.44 PM | Sunday

=================================

After card has been played, if the round is over, animate the discard pile toward the nextPlayerId to show that player won that round.

Dec 2, 2024 | 04.26 PM | Monday

================================

Solving reactivity-related problems in Svelte is fun. It's like solving a puzzle that you know you'll solve at the end. I opted for the Dialog component instead of the Drawer (that I had used to take score declarations) for displaying the scores of players in the games played in a requested room, and the 'open' prop for the component had to be bound to a state, and I had to make use of $effect() with untrack() inside of the component to get the reactivity to work properly inside the component. Apparently, this pattern of programmatically opening or closing i.e. changing the open state of the component is called 'Controlled Usage'. I didn't have to fiddle this much when writing the score declaration code using the Drawer component, but maybe coz that was a once-a-game-per-user thing. Here, I had to make sure to change the component was reacting properly to the change in the roomUid and the openState props when the user asked to show the scores for different rooms.

For the shadcn-svelte components, the "Primitive Component" (the original components on which the shadcn-svelte components are based) docs linked below each component are really enlightening: https://www.bits-ui.com/docs/components/dialog and for the Drawer: https://vaul.emilkowal.ski/default#controlled

Dec 3, 2024 | 1.59 PM | Tuesday

================================

Working with animations/transitions based on reactive props is super confusing.. Sometimes the discard pile moving towards the winner with decreasing opacity wasn't happening - it would just vanish inside the discard pile box. Sometimes. For some reason, the deltaX and deltaY for the translation were being computed as 0, though they should not, given that they're computed based on the location of the discard pile box's id, which is a constant "discard-pile". But the winning player's hand box's id is dynamic "player-{playerId}" this playerId variable is actually the playerId property of a state variable that's an array of the handcard properties for all players (except self) of the game, or it's the playerId property of a state variable that's an object describing the self player. I guessed perhaps the javascript getElementById() wasn't getting the right playerId due to this playerId being invalid during the transient stage of actual updating of the state variable. So I changed the state variable that toggles the animation of the discard pile component to also depend on the aforementioned array state variable containing info on hands of all players of the game:

******

let animateWinnerState: boolean = $derived.by(() => {

let retval = false;

if (

latestPlay.roundIsOver &&

playersInRoom.length > 0 &&

orderedOpponentHandCounts.length > 0

) {

console.log('About to toggle animate on now');

retval = true;

}

return retval;

});

******

apart from the previous dependency on the latestPlay.roundIsOver flag alone, and it started working fine. I also decided to actually call the function to calculate the deltas for the animation instead of tracking it with a state variable of its own:

****

<DiscardPileOfCards

cards={discardPile}

animateFlag={animateWinnerState}

--delY={ComputeAnimationDeltas().y + 'px'}

--delX={ComputeAnimationDeltas().x + 'px'}

onAnimationOver={() => {

discardPile = [];

}}

></DiscardPileOfCards>

****

Then the animation actually looked better. I'm not entirely sure why, but one discard pile card slightly lags behind the other when moving towards the winning player's box. But it happens always except during a page reload, in which case both the cards move together.

5.26 PM

=======

It's just so much easier to use pre-built components with shadcn-svelte rather than trying to roll your own, no matter how easy it seems at the outset. You will definitely spend a lot more time than you previously anticipated writing your own; there's just so many small details with regards to the layout/theme/colors/UX/etc. that need to be consistent with the rest of your app's design and looks. I'm saying this coz I just accomplished using the Drawer component what it took nearly an hour for me to write my own modal dialog - and even then it wasn't behaving exactly the way I wanted it to.

5.50 PM

=======

The new createNewRoomDrawer component doesn't animate when showing, unlike when hiding. Wondering why.. Could it be because I'm using the "Controlled Usage" feature?

11.25 PM

=========

This could just be my newbie self in the space of reactive JS development, but it seems reactivity inherently requires global states, and manipulation of those states from all sorts of places in a component/page. Maintaining a tight dependency order of states can become a nightmare in the context of external API calls with fetch() and the prevalence of promises/async-await based programming that comes with it. I am starting to realize the utility of no-side-effect and globals-unaware pure functions.

December 5, 2024 | 2.47 PM | Thursday

======================================

For four players, the score display dialog box's fixed width (which turns out is applied to the Dialog.Content part of the Dialog component given by shadcn-svelte) of "max-w-lg" was too constraining for the table headings (since they are player names). So, I searched all the project files Ctrl + Shift + F in VS Code for the applied Tailwind classes (found out using the Elements view in devtools) that were to blame for restraining the width of the dialog box component and thus the table: "bg-background fixed left-[50%] top-[50%] z-50 grid w-full max-w-lg" and I changed it to max-w-xl. The exact file is "dialog-content.svelte".

December 6, 2024 | 6.24 PM | Friday

==================================

Guess what, the discard pile cards still sometimes fail to move to the winning player's box, and disappear in place. I've narrowed down the cause to the fact that the players' boxes' <div> elements don't get updated with the correct id since it's assigned dynamically "player-{latestPlayInfo.nextPlayerId}". So, the coordinates to calculate the deltaX and deltaY default to 0,0. This happens because when the latestPlayInfo.roundIsOver property updates, the animation is triggered, which kicks off the computation of the cooordinates. All this happens(sometimes) before the DOM gets updated with the "player-{latestPlayInfo.nextPlayerId}", hence the 0,0 coordinates and the in-place vanishing discard pile cards.

Apparently, there's a tick() lifecycle hook in Svelte that can be used to wait for DOM updates:

****

While there’s no “after update” hook, you can use tick to ensure that the UI is updated before continuing. tick returns a promise that resolves once any pending state changes have been applied, or in the next microtask if there are none.

****

SO post on this topic: https://stackoverflow.com/questions/61295334/understanding-svelte-tick-lifecycle, one of the answers there:

***

<script>

let ref;

let value;

const updateText = async (newValue) => {

value = newValue;

// NOTE: you can't measure the text input immediately

// and adjust the height, you wait for the changes to be applied to the DOM

await tick();

calculateAndAdjustHeight(ref, value);

}

</script>

<textarea bind:this={ref} {value} />

***

Even that didn't do it though.. I was still getting the vanishing-in-place discard pile sometimes. Then I realized that whenever the animationState changes to true (that triggers the animation) my player boxes, my code assumes that the player boxes have already been updated - their playerIds have already been assigned, and so on. But my UpdateGameState() function looked like:

****

function UpdateGameState() {

UpdatePlayerBasicInfo().then((_) => {

UpdateActiveGameExists().then((_) => {

UpdatePlayersInRoom().then((_) => {

UpdateDiscardPile();

UpdatePlayersHandCounts();

UpdateSelfHand();

UpdateLatestPlayInfo();

UpdatePlayersScores();

});

});

});

}

****

The UpdateLatestPlayInfo() call is what triggers the animation by changing the animationState variable. If for some reason, the players' ids have not been established (happens in UpdatePlayersHandCounts(), which is an asynchronous function, meaning it makes fetch() calls to the backend - all the above functions are btw) when the UpdateLatestPlayInfo() method has already started execution, it is possible for the players' boxes' divs' ids' to not be properly assigned since they're reactive: "player-{latestPlayInfo.nextPlayerId}". I then knew that I had to ensure sequential execution of UpdateLatestPlayInfo() _after_ UpdatePlayersHandCounts(). So, I converted UpdatePlayersHandCounts() to a Promise<void> returning function, like I did the functions above it following the same logic that it requires the information gathered by them. So the new UpdateGameState() function looks like:

****

function UpdateGameState() {

UpdatePlayerBasicInfo().then((_) => {

UpdateActiveGameExists().then((_) => {

UpdatePlayersInRoom().then((_) => {

UpdatePlayersHandCounts().then((_) => {

UpdateLatestPlayInfo();

UpdateDiscardPile();

UpdateSelfHand();

UpdatePlayersScores();

});

});

});

});

}

****

Of course I retained the tick() part I had added before to my UpdateLatestPlayInfo() function earlier:

****

function UpdateLatestPlayInfo() {

GetLatestPlay(playerUid).then((payload) => {

if (payload.success) {

latestPlay = payload.data;

if (latestPlay.roundIsOver) {

tick().then(() => {

animateWinnerState = true;

});

} else {

animateWinnerState = false;

}

if (latestPlay.gameIsOver) {

setTimeout(() => {

latestPlay.nextTurnPlayerId = -1; // this is to prevent player boxes from glowing anymore. it's in a timeout coz we want the winner animation to play

alert('Game Over!');

}, 2000);

}

}

});

}

****

I no longer faced the issue again no matter how many times I refreshed the page. Oh, and by the nature of this problem, it should be obvious that this problem, when it occurs, occurs only the first time the page is loaded since that's the only time that the playerbox divs may have invalid playerIds for their id

Dec 9, 2024 | Monday | 02.01 PM

---------------------------------

It was time to Dockerize the backend application. So, I followed the official Microsoft's guide, with a few modifications to arrive at the following Dockerfile to build the image for the backend application:

***

# Ref: https://learn.microsoft.com/en-us/dotnet/core/docker/build-container?tabs=windows#publish-net-app

FROM mcr.microsoft.com/dotnet/sdk:8.0@sha256:35792ea4ad1db051981f62b313f1be3b46b1f45cadbaa3c288cd0d3056eefb83 AS build-env

WORKDIR /App

# Copy everything

COPY . ./

# Restore as distinct layers

RUN dotnet restore

# Build and publish a release

RUN dotnet publish -c Release -o out

# Build runtime image

FROM mcr.microsoft.com/dotnet/aspnet:8.0@sha256:6c4df091e4e531bb93bdbfe7e7f0998e7ced344f54426b7e874116a3dc3233ff

WORKDIR /App

COPY --from=build-env /App/out .

# Expose the port your application is listening on. Not strictly required. dotNET 8.0 defaults to this.

EXPOSE 8080

ENTRYPOINT ["dotnet", "CallBreakBackEnd.dll"]

***

The image can be built as:

docker build -t s0ft/callbreak-api:1.0 -f Dockerfile .

and it can be output as a .tar file as (this was used to import the image using Portainer in my local server):

docker save --output callbreak-api.tar s0ft/callbreak-api:1.0

A quick google search revealed that the EXPOSE 8080 line isn't strictly required - the port mapping can be specified when `docker run`ing the image. Also, it was here that I learned that .net 8.0, and .net as a whole, has a lot of ways to define the URL/Port to listen to serve on. Just do a quick search for "how to set the port for a .net application" and you'll find lots of info on the topic. https://andrewlock.net/8-ways-to-set-the-urls-for-an-aspnetcore-app/ <-- This post lists 8 of them, and the guy has some really good posts for .net

.net 8.0 by default listens on port 8080, and it was a breaking change from previous .net versions. So, I removed the messy urls I had haphazardly created in Properties/launchSettings.json file of my solution. I learned that the file is only ever used in development mode. If nothing else is specified using any of the above listed methods, on Release build, it's going to be listening on port 8080.

After dockerizing the application, I first ran it as "docker run -p 7211:8080 callbreak-api" and it worked fine. So, I created a docker-compose.yml file for it:

***

version: '3.8' # Docker Compose file format version

services:

callbreak-api: # Name of the service

image: callbreak-api # Name of the image

ports:

- "7211:8080" # Map port 7211 on the host to port 8080 in the container

restart: unless-stopped

***

By default, if no url is specified (in .net project itself or when `docker run`ing or when using a compose file), the image binds(listens to) on that port on all network interfaces, which I think is the most common way to host dockerized versions of .net web applications. So, say you have an ubuntu server OS running docker. You can safely deploy the image as a container in this docker environment, and any request to port 7211 to the OS will be received by your app. You only need to know the IP of your ubuntu server OS. I tried it using Postman and it just worked.

The obvious next step was to expose this service to the internet. I ran into CloudFlare Tunnels, localhost.run, Tailscale Funnel and a few other services that make this easy but I finally settled with ngrok. The general principle is the same for all these solutions if I'm not mistaken. It's all just SSH tunnels all the way. You create a tunnel from your machine, where your service is hosted, to their service - which is always fixed (no dynamically changing IP) and typically a domain name they provide. This is either done manually by you using your local instance of SSH, such as in localhost.run, or using readymade applications that basically do the same with a few other bells and whistles. The service providers then create a mapping from a domain name they control to this tunnel on their side. When anyone on the internet makes requests to this domain name, it's magically routed to your local service. I had dug deeper into this topic in the past somewhere in my c0dew0rth blog. But that's the gist of it from what I understand.

With ngrok, I just used their docker image for ngrok agent exactly the way they have it specified on their website:

**

docker pull ngrok/ngrok

docker run --net=host -it -e NGROK_AUTHTOKEN=your_token ngrok/ngrok:latest http --url=your_assigned_static_domain.ngrok-free.app 7211

**

Of course when you have an account with them, all of the above variables will be auto-filled for you (talking about 'your_token' and 'your_assigned_static_domain'). Of course you need to use your 1 free static domain instead of an ephemeral endpoint for 'your_assigned_static_domain' to be truly static.

The last part is the port number to forward all http requests to in your machine. Since we already have our .net application image listening on port 7211, the route an external request takes is as follows:

Internet->ngrok domain->ngrok container running in your docker->host OS for your docker @ port 7211->your .net app container listening on port 7211 on the host OS->your .net app listening on port 8080 inside the container.

So, it all just works!

Of course ngrok had some conditions that had to be met for things to work. Going to the static domain that they give you on your browser shows a default anti-abuse webpage so that users know that a ngrok-enabled routing is happening to some guy's local service somewhere. Whenever a HTTP request is made to this static domain/endpoint from say a browser, in my case my SvelteKit app running in some user's browser, this page is served instead of the actual content to be served by my .net appliation. To circumvent this, the default anti-abuse page lists three ways: either go paid, or send an additional header for each request with the key 'ngrok-skip-browser-warning' having any value (empty strings don't seem to work though, I tried 'dont matter' and it worked, so I stuck with this method), or set a non-standard 'User-Agent' header other than that for the commonly known browsers. So, I had to modify my SvelteKit app accordingly.

As for the client-side i.e. my SvelteKit app itself, I followed the official guide(it's short) to build the app for release mode as an SPA (I think it's called using static adapter to build an SPA or something along those lines), and `npm run build` gave me a 'build' folder that contained the root (or as the docs call it, the 'fallback') html page along with all the static assets (the card SVG's) and all the required compiled and optimized javascript files. As it's a Single Page App, you are also given a handy .htaccess rewrite rule in the docs to reroute all non-existent resource requests to the fallback page for the SvelteKit's router to handle. I just placed the contents of the 'build' folder inside a new subdomain - callbreak.ajashra.com folder in the file system of my hosting provider. I then had to modify my .net application to allow requests from the origin 'https://callbreak.ajashra.com' (http didn't work with Google's Identity Service library for SSO), and not just localhost and its many variants, so that strict CORS wouldn't be a problem for browsers. And ofcourse I also had to add the same entry in my developer console's list of allowed origins.

That's all pretty much. 3.18 PM

I now see why automatic deployments would be an attractive thing to have for large projects. For the smallest of change to my backend, I need to rebuild my .net docker image and re-deploy it on my ubuntu server. For a change in my frontend, I need to rebuild it and re-copy it to my hosting provider's file system.

.png)